Automated Recognition and Generation of Movement Paths that Communicate Emotion

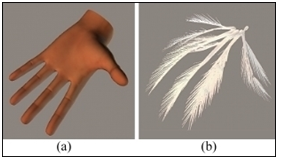

Figure: a) Two visitors immersed in Hylozoic Series, a responsive architectural geotextile environment. b) Hylozoic Soil consists of layers of mechanical fronds and whiskers that move in response to the human occupants. Reprinted with permission.

Body language and movement are rich media for communicating and perceiving emotional state. Motion may be more expressive and subtle than language or facial expression, which are easier to consciously suppress or control. In our research, we are interested in:

1. understanding which properties of motion convey emotional content,
2. developing computational models for automatic recognition of emotions from motion,
3. developing computational models for automatic generation of motion paths that convey specific emotions,
4. adapting pre-defined motion paths in order to overlay emotional content, and
5. studying the impact of the moving structure's appearance, kinematics and dynamics on the conveyance of emotion through motion.

Our research therefore aims to develop a systematic approach for automatically identifying the movement features most salient to the bodily expression of emotion, and to use those features in new computational models for affective movement recognition and generation on both anthropomorphic and non-anthropomorphic structures.

Using motion capture, custom computational models, and animation software, we have developed a system for automatic recognition of emotions encoded in an affective hand movement dataset. The model can also be used to display automatically generated motion paths that successfully convey emotional content. The effectiveness of the models has been confirmed through user studies in which participants rated the emotional content of animations of the original and regenerated motion paths. Specifically, the algorithms can:

1. successfully classify a limited set of captured movements into the emotional categories happy, sad, and angry;
2. extract the key subset of low-level motion capture data that is important to the communication of emotional state; and
3. regenerate the original motion paths from this limited subset of the original data without compromising the emotional content.

For example, using motion capture data, we have investigated how original and regenerated affective movements are perceived on different structures, such as an animated human hand and a fern-like structure.

Figure: Structures used to display expressive movements. a) anthropomorphic (human-like) hand model, b) non-anthropomorphic fern-like structure.

Example of an angry movement regenerated on the fern-like structure.

Videos of the different motion and structure combinations can be viewed here.

The next steps in this research are to develop a mapping from the key low-level motion features to a high-level description of the motion, and then to invert that mapping so that high-level emotional descriptions can be "overlaid" on an arbitrary motion path.

Applications of this technology include:

- the design of physical elements of interactive structures such as the Hylozoic Series, which will enhance our ability to control the visitor experience,
- the design of more effective service robots that might recognize the emotional state of a human and use emotional display to respond appropriately, and
- computer animation tools that might allow the animator to apply “emotional filters” to animated characters’ motion paths in the same way one can apply visual filters to computer images.

This work is a collaboration with PBAI and Prof. SarahJane Burton at Sheridan College.
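This page does not describe how the recognition, extraction and regeneration capabilities listed above are implemented; the group's own methods are reported in the publications (e.g. discriminative functional analysis). The following is only a generic, hypothetical sketch of the three steps on a one-dimensional movement trace. It assumes two hand-picked kinematic features (mean absolute speed and acceleration) with a nearest-centroid classifier for recognition, and uniform key-frame subsampling plus linear interpolation as a stand-in for extracting a key subset and regenerating the path. All function names, the frame interval `DT`, and the toy training data are hypothetical.

```python
from math import dist
from statistics import mean

# Hypothetical representation: one joint coordinate sampled at a fixed frame interval.
Movement = list[float]
DT = 0.01  # assumed frame interval in seconds (hypothetical)


def kinematic_features(m: Movement, dt: float = DT) -> tuple[float, float]:
    """Mean absolute speed and acceleration via finite differences (needs >= 3 samples)."""
    vel = [(b - a) / dt for a, b in zip(m, m[1:])]
    acc = [(b - a) / dt for a, b in zip(vel, vel[1:])]
    return mean(abs(v) for v in vel), mean(abs(a) for a in acc)


def train_centroids(data: dict[str, list[Movement]]) -> dict[str, tuple[float, float]]:
    """Average the feature vectors of each labelled class (e.g. happy, sad, angry)."""
    centroids = {}
    for label, movements in data.items():
        feats = [kinematic_features(m) for m in movements]
        centroids[label] = (mean(f[0] for f in feats), mean(f[1] for f in feats))
    return centroids


def classify(m: Movement, centroids: dict[str, tuple[float, float]]) -> str:
    """Assign the label whose centroid is nearest in (unscaled) Euclidean distance."""
    f = kinematic_features(m)
    return min(centroids, key=lambda label: dist(f, centroids[label]))


def keyframes(m: Movement, step: int) -> list[tuple[int, float]]:
    """Keep every `step`-th sample plus the final one, as (index, value) pairs."""
    idx = list(range(0, len(m), step))
    if idx[-1] != len(m) - 1:
        idx.append(len(m) - 1)
    return [(i, m[i]) for i in idx]


def regenerate(keys: list[tuple[int, float]]) -> Movement:
    """Rebuild a full-length path by linear interpolation between key frames."""
    out = []
    for (i0, y0), (i1, y1) in zip(keys, keys[1:]):
        for i in range(i0, i1):
            t = (i - i0) / (i1 - i0)
            out.append(y0 + t * (y1 - y0))
    out.append(keys[-1][1])
    return out


if __name__ == "__main__":
    # Toy, made-up training traces: one slow/smooth, one fast/jerky.
    training = {
        "sad": [[0.0, 0.01, 0.02, 0.03]],
        "angry": [[0.0, 0.5, 0.0, 0.5]],
    }
    centroids = train_centroids(training)
    print(classify([0.0, 0.4, 0.0, 0.4], centroids))  # angry
    print(regenerate(keyframes([0.0, 1.0, 2.0, 3.0, 4.0], 2)))
```

Because the two features differ in scale by orders of magnitude, a practical version would standardize them before computing distances. Uniform subsampling also keeps frames regardless of their emotional salience, unlike the selection of a discriminative key subset described on this page.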

Researchers: Ali-Akbar Samadani, Mo Memarian, Rob Gorbet, Dana Kulić

Key Publications:

- A. Samadani, A. Ghodsi and D. Kulić, Discriminative Functional Analysis of Human Movements, Pattern Recognition Letters, 2013, In Press.
- A. Samadani, R. Gorbet and D. Kulić, Gender Differences in the Perception of Affective Movements, International Workshop on Human Behavior Understanding, pp. 65-76, 2012.
- A. Samadani, E. Kubica, R. Gorbet and D. Kulić, Perception and Generation of Affective Hand Movements, International Journal of Social Robotics, Vol. 5, No. 1, pp. 35-51, 2013.
- A. Samadani, B. J. DeHart, K. Robinson, D. Kulić, E. Kubica and R. B. Gorbet, A Study of Human Performance in Recognizing Expressive Hand Movements, IEEE International Symposium on Robot and Human Interactive Communication, pp. 93-100, 2011.