
Incremental Learning for Humanoid Robots

This work was initiated at the Nakamura Yamane Lab at the University of Tokyo, in collaboration with Professor Yoshihiko Nakamura. The aim of this research is to develop algorithms for life-long, incremental learning of human motion patterns for humanoid and other robots. We are developing incremental algorithms for automatically segmenting, clustering and organizing motion pattern primitives, which are observed from human demonstration. These algorithms can be applied to learn human motion for activity recognition during human-robot interaction, progress monitoring during rehabilitation and sports training, or skill transfer for automation. The motion primitives are represented as stochastic Markov-based models, where the size and structure of the model are automatically selected by the algorithm.


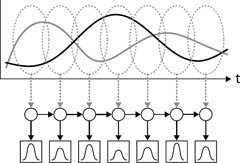

First, motions are segmented using a variant of the Kohlmorgen-Lemm stochastic segmentation approach. An HMM is built over a sliding set of windows, and the Viterbi algorithm is used to generate the optimum state sequence, which represents the segmentation result. Segmented motions are then passed to an on-line, incremental clustering algorithm, which incrementally builds a tree structure representing a hierarchy of known motions.
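The role of the Viterbi algorithm in segmentation can be illustrated with a minimal sketch. It does not reproduce the Kohlmorgen-Lemm variant: the two fixed Gaussian states, the scalar signal, and all parameter values (`means`, `std`, `stay_prob`) are assumptions chosen for illustration. Segment boundaries are read off wherever the decoded state changes.

```python
import math

def viterbi(obs, means, std, stay_prob):
    """Most likely state path for a 1-D Gaussian-emission HMM (log domain)."""
    n_states = len(means)
    log_stay = math.log(stay_prob)
    log_switch = math.log((1.0 - stay_prob) / (n_states - 1))

    def log_emit(x, s):
        # Gaussian log-density, dropping the constant shared by all states.
        return -0.5 * ((x - means[s]) / std) ** 2

    # Uniform prior over the initial state (constant dropped).
    score = [log_emit(obs[0], s) for s in range(n_states)]
    back = []  # back[t-1][s] = best predecessor of state s at time t
    for x in obs[1:]:
        new_score, pointers = [], []
        for s in range(n_states):
            best_prev = max(
                range(n_states),
                key=lambda p: score[p] + (log_stay if p == s else log_switch),
            )
            trans = log_stay if best_prev == s else log_switch
            new_score.append(score[best_prev] + trans + log_emit(x, s))
            pointers.append(best_prev)
        score = new_score
        back.append(pointers)

    # Backtrack from the best final state.
    state = max(range(n_states), key=lambda s: score[s])
    path = [state]
    for pointers in reversed(back):
        state = pointers[state]
        path.append(state)
    path.reverse()
    return path

def segment_boundaries(path):
    """Indices at which the decoded state changes."""
    return [t for t in range(1, len(path)) if path[t] != path[t - 1]]

# Hypothetical scalar signal: rest, a short motion, rest again.
signal = [0.1, -0.2, 0.0, 0.2, 2.1, 1.9, 2.2, 1.8, 0.1, -0.1]
path = viterbi(signal, means=[0.0, 2.0], std=0.5, stay_prob=0.9)
boundaries = segment_boundaries(path)
```

A high `stay_prob` penalizes state changes, so isolated noisy samples do not produce spurious segments.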


The motions abstracted by the incremental clustering algorithm are then added to the segmentation algorithm as known motions, which further improves the segmentation result. In this way, both segmentation and clustering performance improve over time as more motions are observed. The resulting tree structure depends on the robot's history of observations, with the most specialized (leaf) nodes occurring in those regions of the motion space where the most examples have been observed.
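How a tree can grow deeper where more examples are observed is sketched below. This is a simplified illustration, not the algorithm from the publications: it replaces the stochastic motion models with plain feature vectors and Euclidean distance, and the names `Node`, `insert`, `min_size` and `shrink` are hypothetical.

```python
import math

class Node:
    """One cluster in the tree; children are more specialized sub-clusters."""
    def __init__(self, radius):
        self.radius = radius   # observations this close to the centroid belong here
        self.total = None      # running sum of observations routed through this node
        self.count = 0
        self.pending = []      # observations held here, not yet grouped into a child
        self.children = []

    def add_stats(self, x):
        self.total = list(x) if self.total is None else [a + b for a, b in zip(self.total, x)]
        self.count += 1

    def centroid(self):
        return [a / self.count for a in self.total]

def insert(root, x, min_size=3, shrink=0.5):
    """Route x to the most specialized matching node, then try to form a new child."""
    node = root
    while True:
        node.add_stats(x)
        child = next((c for c in node.children
                      if math.dist(x, c.centroid()) <= c.radius), None)
        if child is None:
            break
        node = child
    node.pending.append(x)
    # Local clustering: enough close neighbours of x form a tighter child node.
    radius = node.radius * shrink
    near = [m for m in node.pending if math.dist(m, x) <= radius]
    if len(near) >= min_size:
        child = Node(radius)
        for m in near:
            child.add_stats(m)
        child.pending = near
        node.pending = [m for m in node.pending if math.dist(m, x) > radius]
        node.children.append(child)

def depth(node):
    return 1 + max((depth(c) for c in node.children), default=0)

# Made-up 2-D observations: several near the origin, one far away.
root = Node(radius=4.0)  # the root accepts everything; its radius only sets the scale
for obs in [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (9.0, 9.0), (0.05, 0.05)]:
    insert(root, obs)
```

In this run the densely sampled region near the origin ends up two levels below the root, while the single distant observation stays unclustered at the root.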


The algorithm has been tested on a large dataset of continuous motions. Data is first collected from a motion capture studio. The raw marker data is then converted to joint data for a 20-DoF humanoid using online inverse kinematics. This continuous sequence of joint angle data is then used as input into the segmentation and clustering algorithm.

- small data set: Right Arm Raise, Left Arm Lower, Kick Extend, Squat Retract


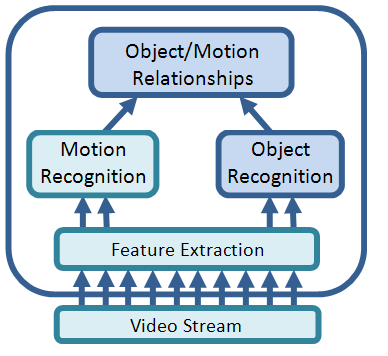

Learning to handle objects

The goal of this research is to develop a robot learning system capable of learning to perform actions on objects in its environment. Learning should be accomplished through extracting the underlying structure of what has been observed in order to allow generalization to different situations. This requires appropriate knowledge abstraction and representation.

The input to the system is a video stream of a human demonstrating the task to be learned. Basic computer vision techniques are used to extract object locations and other object features from the video stream. The object locations form motion trajectories used for motion recognition. These motion trajectories are segmented into motion primitives, and motion models are trained on the motion primitives. By clustering the motion models, e.g. using Affinity Propagation (AP), it is possible to perform motion recognition, i.e. to determine whether a motion is similar to previously observed motions. The object features are used for object recognition via machine learning techniques such as Self-Organizing Maps (SOMs). It is also necessary to determine the different states, e.g. locations and configurations, that the objects can take. Once the motions and objects have been recognized, the relationship between the motions and objects will be modeled at the highest level of the hierarchy in order to create a continuous model of the task flow.
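The clustering step can be sketched with a compact, pure-Python version of Affinity Propagation using the standard responsibility and availability updates. The scalar `features`, the negative squared distance used as similarity, and the `preference`, `damping` and `iterations` values are assumptions for illustration; the actual system clusters trained motion models.

```python
def affinity_propagation(S, damping=0.7, iterations=200):
    """S[i][k]: similarity of point i to candidate exemplar k; S[k][k] is the preference."""
    n = len(S)
    R = [[0.0] * n for _ in range(n)]  # responsibilities
    A = [[0.0] * n for _ in range(n)]  # availabilities
    for _ in range(iterations):
        # Responsibility: how well k suits i compared with the best rival candidate.
        for i in range(n):
            for k in range(n):
                rival = max(A[i][j] + S[i][j] for j in range(n) if j != k)
                R[i][k] = damping * R[i][k] + (1 - damping) * (S[i][k] - rival)
        # Availability: how much support candidate k has gathered from other points.
        for k in range(n):
            pos = [max(0.0, R[j][k]) for j in range(n)]
            for i in range(n):
                if i == k:
                    new = sum(pos) - pos[k]
                else:
                    new = min(0.0, R[k][k] + sum(pos) - pos[i] - pos[k])
                A[i][k] = damping * A[i][k] + (1 - damping) * new
    # Each point is assigned to the candidate with the highest combined evidence.
    return [max(range(n), key=lambda k: A[i][k] + R[i][k]) for i in range(n)]

# Made-up 1-D descriptors of six motion models forming two obvious groups.
features = [0.0, 0.1, 0.3, 5.0, 5.2, 5.3]
preference = -10.0  # lower values yield fewer clusters
S = [[preference if i == k else -(a - b) ** 2 for k, b in enumerate(features)]
     for i, a in enumerate(features)]
labels = affinity_propagation(S)  # labels[i] is the index of point i's exemplar
```

Unlike k-means, the number of clusters is not fixed in advance; it emerges from the preference value, which is one reason the method suits recognition of previously unseen motion types.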

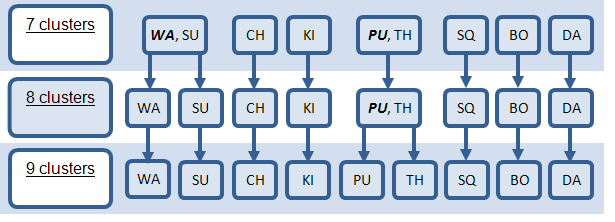

So far, the motion recognition system has been tested on a small dataset of four different motion types, with multiple exemplars of each motion type shown in video streams; the system obtains perfect clustering results. When tested on an existing, larger dataset of nine different motion types with multiple exemplars of each, perfect clustering results are again obtained. Furthermore, the clustering can be made coarser or finer, so that motions more similar to each other than to the rest are grouped together at the coarser levels. Below is a tree showing the clustering results for different levels of distinction. In the 7-cluster case, walking (WA) and sumo leg raising (SU) are placed in one cluster. Punching (PU) and throwing (TH) are also placed in one cluster. This is reasonable because these motions are more similar to each other than to the other motions. At 9 clusters, all the motions are separated correctly into their motion types.

Figure: Clustering results of larger dataset using DMPs and AP. The total number of clusters found is indicated at the beginning of each row. The types of motions contained in each cluster are indicated through their labels, e.g. WA = walking, SU = sumo leg raising, CH = cheering etc.

Researchers: Jane Chang, Dana Kulić

Key Publications:

- D. Kulić, C. Ott, D. Lee, J. Ishikawa and Y. Nakamura, Incremental learning of full body motion primitives and their sequencing through human motion observation, International Journal of Robotics Research, Vol. 31, No. 3, pp. 330-345, 2012.
- D. Kulić, W. Takano and Y. Nakamura, On-line segmentation and clustering from continuous observation of whole body motions, IEEE Transactions on Robotics, Vol. 25, No. 5, pp. 1158-1166, 2009.
- D. Kulić, W. Takano and Y. Nakamura, Incremental Learning, Clustering and Hierarchy Formation of Whole Body Motion Patterns using Adaptive Hidden Markov Chains, International Journal of Robotics Research, Vol. 27, No. 7, pp. 761-784, 2008.