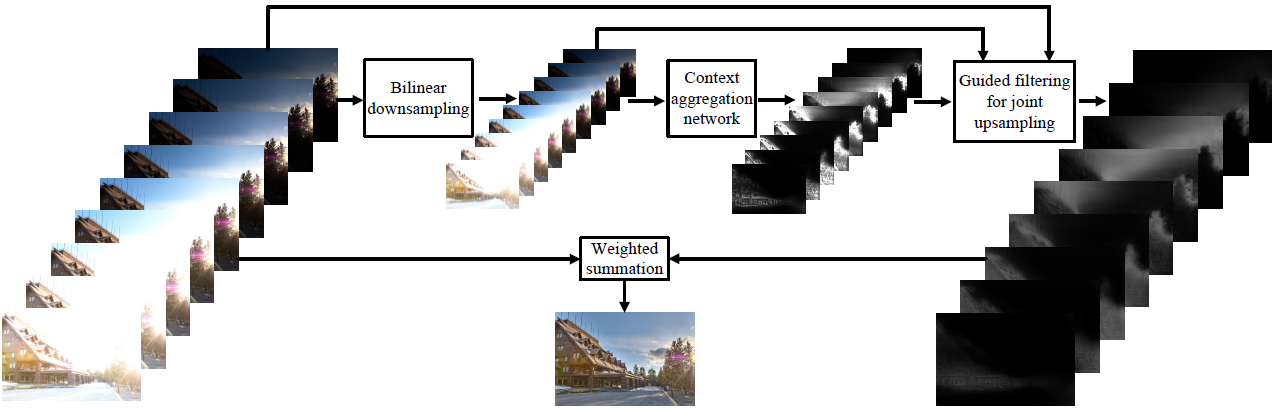

We propose MEF-Net, a fast multi-exposure image fusion (MEF) method for static image sequences of arbitrary spatial resolution and number of exposures. We first feed a low-resolution version of the input sequence to a fully convolutional network for weight map prediction. We then jointly upsample the weight maps using a guided filter. The final image is computed as a weighted fusion of the input exposures. Unlike conventional MEF methods, MEF-Net is trained end-to-end by optimizing the perceptually calibrated MEF structural similarity (MEF-SSIM) index over a database of training sequences at full resolution. Across an independent set of test sequences, we find that the optimized MEF-Net achieves consistent improvement in visual quality for most sequences, and runs 10 to 1000 times faster than state-of-the-art methods.
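The three stages described above (low-resolution weight prediction, guided-filter upsampling, weighted fusion) can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the MEF-Net implementation: `predict_weights_lowres` is a hypothetical hand-crafted stand-in for the trained fully convolutional network, downsampling is plain strided subsampling, the inputs are grayscale, and taking absolute values before normalizing the weights is a choice made for this sketch. All function names and parameters (`s`, `r`, `eps`) are illustrative.

```python
import numpy as np

def box_mean(x, r):
    """Mean over a (2r+1)x(2r+1) window, using edge padding at the borders."""
    p = np.pad(x, r, mode="edge")
    h, w = x.shape
    out = np.zeros((h, w), dtype=np.float64)
    for dy in range(2 * r + 1):
        for dx in range(2 * r + 1):
            out += p[dy:dy + h, dx:dx + w]
    return out / (2 * r + 1) ** 2

def upsample_nearest(x, s):
    """Nearest-neighbor upsampling by an integer factor s."""
    return np.repeat(np.repeat(x, s, axis=0), s, axis=1)

def predict_weights_lowres(seq_lr):
    """Hypothetical stand-in for the network: favor mid-gray (well-exposed) pixels."""
    return np.exp(-0.5 * ((seq_lr - 0.5) / 0.2) ** 2)

def guided_upsample(guide_lr, target_lr, guide_hr, s, r=1, eps=1e-4):
    """Joint upsampling with a guided filter: fit target ~ a * guide + b in
    local low-resolution windows, then apply the upsampled (a, b) to the
    high-resolution guide."""
    mean_i = box_mean(guide_lr, r)
    mean_p = box_mean(target_lr, r)
    cov_ip = box_mean(guide_lr * target_lr, r) - mean_i * mean_p
    var_i = box_mean(guide_lr * guide_lr, r) - mean_i ** 2
    a = cov_ip / (var_i + eps)
    b = mean_p - a * mean_i
    a_hr = upsample_nearest(box_mean(a, r), s)
    b_hr = upsample_nearest(box_mean(b, r), s)
    return a_hr * guide_hr + b_hr

def fuse(seq_hr, s=4):
    """Fuse a (K, H, W) stack of grayscale exposures in [0, 1].
    H and W are assumed to be divisible by s."""
    seq_lr = seq_hr[:, ::s, ::s]                 # low-resolution copy
    w_lr = predict_weights_lowres(seq_lr)        # one weight map per exposure
    w_hr = np.stack([
        guided_upsample(seq_lr[k], w_lr[k], seq_hr[k], s)
        for k in range(seq_hr.shape[0])
    ])
    w_hr = np.abs(w_hr) + 1e-8                   # keep weights positive (sketch assumption)
    w_hr /= w_hr.sum(axis=0, keepdims=True)      # weights sum to 1 at every pixel
    return (w_hr * seq_hr).sum(axis=0)           # weighted fusion
```

Because only the weight prediction runs at low resolution and the remaining steps are per-pixel or small-window operations, the cost of the expensive stage does not grow with the input resolution, which is the intuition behind the speed-up claimed above. Calling `fuse(seq)` on a `(K, H, W)` array returns a single `(H, W)` image.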

@article{ma2019mefnet,
  title={Deep Guided Learning for Fast Multi-Exposure Image Fusion},
  author={Ma, Kede and Duanmu, Zhengfang and Zhu, Hanwei and Fang, Yuming and Wang, Zhou},
  journal={IEEE Transactions on Image Processing},
  volume={29},
  number={},
  pages={2808--2819},
  year={2020}
}